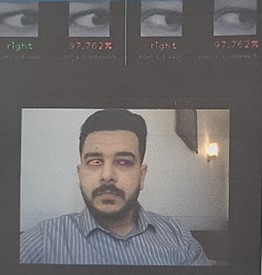

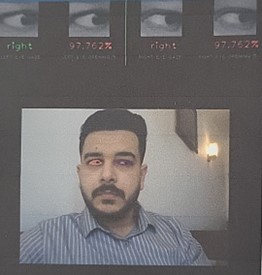

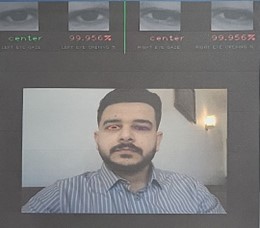

This project introduces an innovative eye-controlled communication system for Arabic speakers with disabilities, offering a simple, cost-effective way to enhance social inclusion. The system tracks eye gestures and translates them into Arabic phrases in real time using a custom language model. Unlike existing technologies, it provides culturally relevant and grammatically correct communication, addressing a critical gap for Arabic speakers. The system has demonstrated 97% accuracy in gesture recognition, allowing individuals with motor impairments to communicate more independently. The work aims to improve the lives of non-verbal individuals across the Arab world by giving them a voice and promoting greater autonomy and inclusion.

- Developer:

Ali is an AI researcher, lecturer, and engineer with a Master’s degree in Artificial Intelligence. They specialise in computer vision, deep learning, and natural language processing, with a particular focus on developing eye-based assistive systems for Arabic-speaking communities. With over three years of hands-on experience, Ali has contributed to peer-reviewed publications in IEEE, Springer, and AIP proceedings. Their research explores the integration of eye-tracking technology and deep learning for gesture recognition, aiming to advance assistive technology. Ali is passionate about using AI to enhance accessibility for individuals with physical and communication disabilities, bridging the gap between research and practical applications.